In my previous posts I covered the existing OpenStack virtual L3 implementations, from the base centralized implementation to the state-of-the-art solution, DVR, which distributes the load among the compute nodes.

I also summarized the limitations of DVR and hopefully convinced you of the motivation for yet another L3 implementation in Neutron.

Just to quickly recap, the approach for DVR’s design was to take the existing centralized router implementation based on Linux network namespaces and clone it on all compute nodes. This is an evolutionary approach and a reasonable step to take in distributing L3, and we've learned a lot from it. However, this solution is far from optimal.

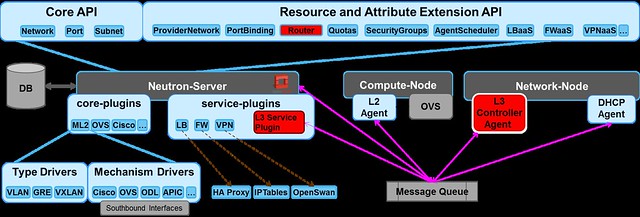

In this post I’m going to present a PoC implementation of an embedded L3 controller in Neutron that solves the same problem (the network node bottleneck) the SDN way, and overcomes some of the DVR limitations.

I chose to start with just the East-West traffic, and leave the North-South for the next step.

I will share some of my ideas for North-South implementation in upcoming posts, as well as links to the Stackforge project.

If you're interested in joining the effort or taking the code for a test drive, feel free to email me or leave a comment below.


SDN controllers are generally perceived as huge, complex pieces of software, with hundreds of thousands of lines of code, that try to handle everything. It's natural that people would not deem such software capable of running "in line". However, this is not necessarily so. As I will demonstrate, the basic SDN controller code can be separated out and deployed in a lean and lightweight manner.

It occurred to me that by combining the well-defined abstraction layers already in Neutron (namely the split into br-tun, br-int and br-ext) and a lightweight SDN controller that can be embedded directly into Neutron, it will be possible to solve the network node bottleneck and the L3 high-availability problems in the virtual overlay network in a simple way.


Solution overview

The proposed method is based on the separation of the routing control plane from the data plane. This is accomplished by implementing the routing logic in distributed forwarding rules on the virtual switches. In OpenFlow these rules are called flows. Put simply, the virtual router is implemented using OpenFlow flows.

The functionality of the virtual router that we should address in our solution is:

- L3 routing

- ARP response for the router ports

- OAM, like ICMP (ping) and others

We decided to create our controller PoC with the OpenStack design tenets in mind, specifically the following:

- Scalability - Support thousands of compute nodes

- Elasticity - Keep controllers stateless to allow for dynamic growth

- Performance - Improve upon DVR

- Reliability - Highly available

- Non-intrusive - Rely on the existing abstractions and pluggable modules

What we intend to prove is:

- We indeed simplify the DVR flows

- We reduce resource overhead (e.g. bridges, ports, namespaces)

- We remove existing bottlenecks (compared to L3Agent and DVR)

- We improve performance

Reactive vs. Proactive Mode

SDN defines two modes for managing a switch: reactive and proactive.

In our work, we decided to combine these two modes so that we could benefit from the advantages of both (although, if you believe the FUD about reactive mode performance, it is quite possible to enhance the solution to be purely proactive). To learn more about this mixed mode, see my previous blog post.


The Proactive part

In this solution we install a flow pipeline in the OVS br-int in order to offload the handling of L2 and intra-subnet L3 traffic by forwarding it to the NORMAL path (utilizing the hybrid OpenFlow switch). This means that we reuse the built-in mechanisms in Neutron for all L2 traffic (i.e. ML2 remains untouched and fully functional) and for L3 traffic that does not need routing (between IPs in the same tenant and subnet).

In addition, we use the OVS OpenFlow extensions to install an ARP responder for every virtual router port. This offloads ARP responses to the compute nodes instead of replying from the controller.

The Reactive part

Out of the remaining traffic (i.e. inter-subnet L3 traffic) the only traffic that is handled in a reactive mode is the first packet of inter-subnet communications between VMs or traffic addressed directly to the routers' ports.
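To make the reactive step concrete, here is a minimal, library-free sketch of the idea: the first packet of an inter-subnet conversation misses the flow table and is handed to the controller, which installs a flow so that later packets are forwarded without involving it. The class names, the `ip_to_port` lookup and the action strings are all hypothetical illustrations, not the PoC's actual code; in a real deployment the lookup data would come from Neutron.

```python
class Controller:
    """Toy controller that answers packet-in events (hypothetical)."""

    def __init__(self, router_mac, ip_to_port):
        self.router_mac = router_mac
        # Assumed lookup: destination IP -> (destination MAC, OVS port).
        self.ip_to_port = ip_to_port
        self.packet_ins = 0

    def packet_in(self, src_ip, dst_ip):
        """Compute the routing actions for a new inter-subnet flow."""
        self.packet_ins += 1
        dst_mac, out_port = self.ip_to_port[dst_ip]
        # Typical routing steps: decrement TTL, rewrite both MACs, output.
        return [
            "dec_ttl",
            "mod_dl_src:" + self.router_mac,
            "mod_dl_dst:" + dst_mac,
            "output:%d" % out_port,
        ]


class FlowTable:
    """Toy stand-in for the L3 forwarding table on one compute node."""

    def __init__(self, controller):
        self.controller = controller
        self.flows = {}

    def process(self, src_ip, dst_ip):
        key = (src_ip, dst_ip)
        if key not in self.flows:
            # Table miss: only this first packet reaches the controller.
            self.flows[key] = self.controller.packet_in(src_ip, dst_ip)
        return self.flows[key]


controller = Controller("fa:16:3e:00:00:01",
                        {"10.0.2.7": ("fa:16:3e:00:00:07", 4)})
table = FlowTable(controller)
first = table.process("10.0.1.5", "10.0.2.7")
second = table.process("10.0.1.5", "10.0.2.7")
```

After both calls the controller has seen a single packet-in; the second packet is served entirely from the installed flow.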


The Pipeline

Perhaps the most important part of the solution is the OpenFlow pipeline, which we install into the integration bridge upon bootstrap. This is the set of flows that controls all traffic in the OVS integration bridge (br-int).

The pipeline works in the following manner:

- Classify the traffic

- Forward to the appropriate element:

  - If it is ARP, forward to the ARP Responder table

  - If routing is required (L3), forward to the L3 Forwarding table (which implements a virtual router)

  - Otherwise, offload to the NORMAL path
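The classification steps above can be sketched in a few lines of plain Python. This is only an illustration of the decision logic, not the flows the PoC installs: the table IDs are the ones listed for the ARP Responder and L3 Forwarding tables in this post, while the `Packet` type and the rule "routing is required when source and destination sit in different known subnets" are assumptions made for the example.

```python
import ipaddress
from dataclasses import dataclass

ETH_TYPE_IPV4 = 0x0800
ETH_TYPE_ARP = 0x0806

# Table IDs as listed for the pipeline in this post.
ARP_RESPONDER_TABLE = 51
L3_FORWARDING_TABLE = 52
NORMAL = "NORMAL"


@dataclass
class Packet:
    """Hypothetical, heavily simplified packet header."""
    eth_type: int
    src_ip: str = ""
    dst_ip: str = ""


def _subnet_of(ip, subnets):
    """Return the subnet that contains ip, or None if there is none."""
    addr = ipaddress.ip_address(ip)
    for net in subnets:
        if addr in net:
            return net
    return None


def classify(pkt, subnets):
    """Return the next pipeline stage for a packet."""
    if pkt.eth_type == ETH_TYPE_ARP:
        return ARP_RESPONDER_TABLE
    if pkt.eth_type == ETH_TYPE_IPV4:
        src_net = _subnet_of(pkt.src_ip, subnets)
        dst_net = _subnet_of(pkt.dst_ip, subnets)
        # Assumption: inter-subnet traffic is what needs routing.
        if src_net and dst_net and src_net != dst_net:
            return L3_FORWARDING_TABLE
    # L2 and intra-subnet L3 traffic is offloaded to the NORMAL path.
    return NORMAL


subnets = [ipaddress.ip_network("10.0.1.0/24"),
           ipaddress.ip_network("10.0.2.0/24")]
```

For example, `classify(Packet(ETH_TYPE_IPV4, "10.0.1.5", "10.0.2.7"), subnets)` selects the L3 Forwarding table, while the same call with two addresses in `10.0.1.0/24` falls through to `NORMAL`.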

In my following posts I will cover in detail the code modifications that were required to support the L3 controller, and publish a performance study comparing the L3 Agent, DVR and the L3 controller for inter-subnet traffic.

If you want to try it out, the code plus installation guide are available here.

Again, if you'd like to join the effort, feel free to get in touch.

Detailed explanation of the pipeline

The following diagram shows the multi-table OpenFlow pipeline installed into the OVS integration bridge (br-int) in order to represent the virtual router using flows only:

[Figure: L3 Flows Pipeline]

The following table describes the purpose of each of the pipeline tables:

| ID | Table Name | Purpose |
|----|------------|---------|
| 0 | Metadata & Dispatch | |
| 40 | Classifier | |
| 51 | ARP Responder | |
| 52 | L3 Forwarding | |
| 53 | Public Network | |
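As an illustration of what a single entry in the ARP Responder table (ID 51) might look like, the sketch below builds an `ovs-ofctl`-style flow that turns an ARP request for a router port into a reply and sends it back out of the ingress port. It follows the NXM `move`/`load` action pattern that Neutron's OVS agent uses for its own ARP responder; whether the PoC installs exactly this flow is an assumption, and the function name, priority, MAC and IP are hypothetical.

```python
import ipaddress

ARP_RESPONDER_TABLE = 51  # table ID as listed in this post


def arp_responder_flow(router_ip, router_mac, priority=100):
    """Build a flow spec that answers ARP requests for router_ip."""
    ip_hex = "0x%08x" % int(ipaddress.IPv4Address(router_ip))
    mac_hex = "0x" + router_mac.replace(":", "").lower()
    actions = ",".join([
        # Send the reply back to whoever asked.
        "move:NXM_OF_ETH_SRC[]->NXM_OF_ETH_DST[]",
        "mod_dl_src:%s" % router_mac,
        # ARP opcode 2 = reply.
        "load:0x2->NXM_OF_ARP_OP[]",
        # The requester becomes the target of the reply.
        "move:NXM_NX_ARP_SHA[]->NXM_NX_ARP_THA[]",
        "move:NXM_OF_ARP_SPA[]->NXM_OF_ARP_TPA[]",
        # The router port becomes the sender.
        "load:%s->NXM_NX_ARP_SHA[]" % mac_hex,
        "load:%s->NXM_OF_ARP_SPA[]" % ip_hex,
        "in_port",
    ])
    match = "table=%d,priority=%d,arp,arp_tpa=%s,arp_op=1" % (
        ARP_RESPONDER_TABLE, priority, router_ip)
    return match + ",actions=" + actions


flow = arp_responder_flow("10.0.1.1", "fa:16:3e:00:00:01")
```

One such flow per virtual router port keeps ARP handling entirely on the compute node, which is the offload described earlier in the post.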

PoC Implementation brief

For the first release, we used Open vSwitch (OVS), with OpenFlow v1.3 as the southbound protocol, and the Ryu project's implementation of the protocol stack as a base library.

The PoC supports the Route API for IPv4 East-West traffic. In the current PoC implementation, the L3 controller is embedded into the L3 service plugin.
