Following my 3-post series covering OpenStack Juno DVR in detail, this post summarizes DVR from my point of view.

In the next post I’m going to present a POC implementation of an embedded L3 controller in Neutron that solves the problem differently. In this post I’d like to explain the motivation for yet another L3 implementation in Neutron.

I believe the L3 Agent drawbacks are pretty clear (centralized bottleneck, limited HA, etc.), so here I’m going to cover the key benefits and drawbacks of DVR as I see them. So, without further ado, let’s start.

Pros

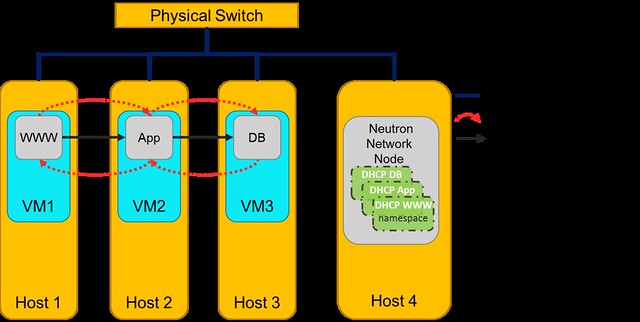

First of all, from a functional point of view, DVR successfully distributes East-West traffic and floating IP DNAT, which significantly offloads the Network node. This achieves two key benefits: first, the failure domains are much smaller (a Network node failure only affects SNAT), and second, scalability improves because the load is distributed across all the compute nodes.

Cons

The approach taken in DVR’s design was to take the existing centralized router implementation, based on Linux network namespaces, and clone it on all compute nodes. This is an evolutionary approach and an obvious step to take in distributing L3, and we’ve learned a lot from it. However, it adds load and complexity in three major areas: Management, Performance and Code.

To explain the technical details I will briefly cover two Linux networking constructs used in the solution for East-West communications:

1. Linux network namespaces (which internally load a complete TCP/IP stack with ARP tables and routing tables)

2. OVS flows

OVS flows were needed in order to block local ARP, redirect return traffic directly to the VMs, and replace the router port MAC (none of which can reasonably be accomplished using namespaces alone). On the flip side, OVS flows can easily accomplish everything that DVR uses the namespaces for (in East-West communications) and, perhaps more importantly, they do so more efficiently (avoiding the overhead of the extra TCP/IP stack, etc.). As a hint at our solution, flows also allow us to improve things further by selectively using a reactive approach where relevant.


Figure: Extra flows installed for DVR

So overall, it seems that using the Linux network namespace as a black box to emulate a router is overkill. This approach makes sense in the centralized solution (L3 Agent), where each tenant’s virtual router uses a single namespace in the DC. In the distributed approach, however, the number of namespaces in the DC is multiplied by the number of compute nodes, so this choice needs to be reevaluated.
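To put rough numbers on that multiplication, here is a minimal back-of-the-envelope sketch. It assumes a simplified model in which every virtual router has VMs on the same number of compute nodes; the function name and the figures in the usage lines are hypothetical and are not measurements from a real deployment.

```python
def router_namespaces(num_routers, hosting_nodes_per_router, distributed):
    """Estimate the number of router namespaces in the data center.

    Simplified model (an assumption, not Neutron code):
    - centralized: one namespace per virtual router;
    - distributed: one namespace per virtual router on every compute
      node that hosts VMs attached to that router.
    """
    if distributed:
        return num_routers * hosting_nodes_per_router
    return num_routers


# Hypothetical deployment: 100 routers, each with VMs on 50 compute nodes.
centralized = router_namespaces(100, 50, distributed=False)
dvr = router_namespaces(100, 50, distributed=True)
print(centralized, dvr)
```

Under these assumed figures the count of namespaces to create, populate and track grows from 100 to 5000, which is the scaling concern described above.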

If you’re not convinced yet, here is a summary of the implications of using this approach:

Resource consumption and Performance

1. DVR adds an additional namespace, as well as virtual ports, per router on all compute nodes that host routed VMs. This means additional TCP/IP stacks that each and every cross-subnet packet must traverse, which adds latency and host CPU consumption.

2. ARP tables in all namespaces on the compute nodes are proactively pre-populated with all possible entries. Whenever a VM is started, all compute nodes that have running VMs from the same tenant are updated to keep these tables up to date (which potentially adds latency to VM start time).

3. Flows and routing rules are proactively installed on all compute nodes.
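The ARP fan-out in item 2 can be illustrated with a small toy model. This is only a sketch of the rule as stated above (every compute node with a running VM of the same tenant gets updated); the `placement` structure and node names are hypothetical and do not reflect Neutron’s actual data model or RPC mechanism.

```python
from typing import Dict, List, Set


def nodes_to_update(placement: Dict[str, List[str]], tenant: str) -> Set[str]:
    """Return the compute nodes whose ARP tables must be refreshed
    when a VM belonging to `tenant` is started.

    `placement` maps a compute node name to the tenant of each VM
    running on it (one list entry per VM).
    """
    return {node for node, tenants in placement.items() if tenant in tenants}


# Hypothetical placement of running VMs across three compute nodes.
placement = {
    "compute-1": ["red", "blue"],
    "compute-2": ["red"],
    "compute-3": ["blue"],
}
touched = nodes_to_update(placement, "red")
```

In this example, starting one more "red" VM touches two of the three nodes, regardless of whether any VM on those nodes will ever talk to the new one.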

Management complexity

1. Multiple configuration points that need to be synchronized: namespaces, routing tables, flow tables, ARP tables and iptables.

2. Namespaces on all compute nodes need to be kept in sync all the time with the tenant’s VM ARP tables and routing information and need to be tracked to handle failures etc.

3. The current DVR implementation does not support a reactive mode (i.e. creating a flow just-in-time), so all possible flows are created on all hosts, even if they are never used.
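The difference between proactive and reactive installation in item 3 can be sketched with a toy flow table. This is an illustrative model only: the `FlowTable` class is hypothetical, flows are reduced to (source, destination) pairs, and it does not represent how OVS, DVR, or any particular controller is implemented.

```python
from itertools import permutations


class FlowTable:
    """Toy flow table keyed by (source, destination) pairs."""

    def __init__(self, reactive):
        self.reactive = reactive
        self.flows = set()

    def preinstall(self, endpoints):
        """Proactive mode: install a flow for every ordered pair up front."""
        for src, dst in permutations(endpoints, 2):
            self.flows.add((src, dst))

    def forward(self, src, dst):
        """Return True on a flow hit, False on a miss.

        In reactive mode a miss installs the flow just in time, so the
        next packet of the same conversation hits.
        """
        if (src, dst) in self.flows:
            return True
        if self.reactive:
            self.flows.add((src, dst))
        return False


vms = ["vm-a", "vm-b", "vm-c", "vm-d"]  # hypothetical endpoints

proactive = FlowTable(reactive=False)
proactive.preinstall(vms)

reactive = FlowTable(reactive=True)
first_packet_hit = reactive.forward("vm-a", "vm-b")
second_packet_hit = reactive.forward("vm-a", "vm-b")
```

With four endpoints the proactive table holds 12 flows before any traffic is sent, while the reactive table holds one flow after the first conversation starts. The trade-off is that the first packet of each conversation misses in reactive mode.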

Code Complexity

1. DVR required cross-component changes due to its multiple configuration points: the Neutron server data model, the ML2 Plugin, the L3 Plugin, the OVS L2 Agent and the L3 Agent.

2. Using all the Linux networking constructs in a single solution (namespaces, flows, Linux bridges, etc.) requires code that can manage all of them.

3. The solution is tightly coupled with the overlay manager (ML2), which means that adding any new type driver (today only VXLAN) requires code additions at all levels (as can be seen in the VLAN patches).


In my next post I will present an alternative solution for distributing the virtual router, which we evaluated and developed (code available) and which attempts to overcome these limitations using SDN technologies.

Comments, questions and corrections are welcome.


Thanks Eran, we're going through your posts on DVR in my team and we pretty much agree with your conclusion. Its total sum of complexity is ... hard to ignore ...
